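I'm going to work through dump questions to prepare for the GCP Associate Cloud Engineer (GCP-ACE) certification exam.

Continuing from the last post, I'll go through questions from the ExamTopics site.

As before, the GCP-ACE exam has to be taken in English, so I'll study using the English question text.

Again, many of the site's answers seem to be wrong, so be sure to check the discussions and Google's documentation as you study.

I hope this helps anyone who wants to get certified quickly, without the stress of wrong answer keys.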

11Page

You have 32 GB of data in a single file that you need to upload to a Nearline Storage bucket. The WAN connection you are using is rated at 1 Gbps, and you are the only one on the connection. You want to use as much of the rated 1 Gbps as possible to transfer the file rapidly. How should you upload the file?

A. Use the GCP Console to transfer the file instead of gsutil.

B. Enable parallel composite uploads using gsutil on the file transfer.

C. Decrease the TCP window size on the machine initiating the transfer.

D. Change the storage class of the bucket from Nearline to Multi-Regional.

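Answer: B

Because the link has plenty of bandwidth and the data is one large file, gsutil parallel composite uploads can split the file into components and upload them in parallel.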
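To make the idea concrete, here is a minimal Python sketch (my own illustration, not gsutil's code) that splits a file into contiguous byte ranges that could be uploaded concurrently, and computes the best-case transfer time for 32 GB over a fully used 1 Gbps link. The component count of 32, decimal gigabytes, and zero protocol overhead are assumptions; in gsutil itself the behavior is governed by settings such as `parallel_composite_upload_threshold`.

```python
# Illustrative sketch only: split a file into byte ranges ("components") that
# could be uploaded in parallel and later composed into a single object.
# The number of components is an assumption, not gsutil's actual logic.

def component_ranges(file_size: int, num_components: int) -> list[tuple[int, int]]:
    """Return (start, end_exclusive) byte ranges that cover the whole file."""
    if file_size <= 0 or num_components <= 0:
        raise ValueError("file_size and num_components must be positive")
    base, extra = divmod(file_size, num_components)
    ranges = []
    start = 0
    for i in range(num_components):
        # The first `extra` components get one extra byte so sizes differ by at most 1.
        size = base + (1 if i < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


def ideal_transfer_seconds(size_bytes: int, link_bits_per_second: float) -> float:
    """Best-case time if the link were fully saturated (no protocol overhead)."""
    return size_bytes * 8 / link_bits_per_second


if __name__ == "__main__":
    size = 32 * 10**9  # the 32 GB file from the question (decimal GB assumed)
    parts = component_ranges(size, 32)
    print(len(parts), parts[0], parts[-1])
    print(ideal_transfer_seconds(size, 1 * 10**9))  # 256.0 seconds at 1 Gbps
```

At 1 Gbps the theoretical floor is about 256 seconds; a single upload stream often cannot keep a high-bandwidth link full, which is the motivation for sending components in parallel.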

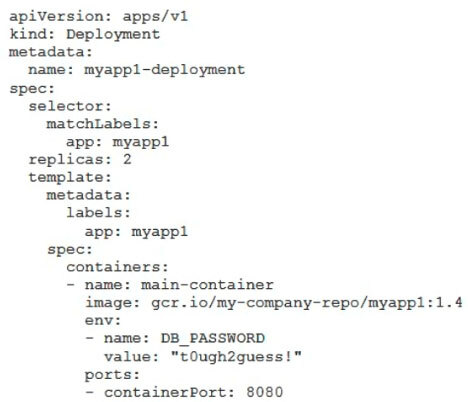

You've deployed a microservice called myapp1 to a Google Kubernetes Engine cluster using the YAML file specified below:

You need to refactor this configuration so that the database password is not stored in plain text. You want to follow Google-recommended practices. What should you do?

A. Store the database password inside the Docker image of the container, not in the YAML file.

B. Store the database password inside a Secret object. Modify the YAML file to populate the DB_PASSWORD environment variable from the Secret.

C. Store the database password inside a ConfigMap object. Modify the YAML file to populate the DB_PASSWORD environment variable from the ConfigMap.

D. Store the database password in a file inside a Kubernetes persistent volume, and use a persistent volume claim to mount the volume to the container.

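Answer: B

The site says C is the answer, but in the discussion B is the overwhelming choice.

ConfigMaps are not meant for passwords or API keys (they are for non-confidential data, e.g., port numbers).

Kubernetes Secrets let you store and manage sensitive information such as passwords, OAuth tokens, and SSH keys.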
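A minimal sketch of option B, building the manifests as Python dicts (kubectl also accepts JSON manifests). The Secret name `myapp1-db`, the key `password`, and the sample value are hypothetical, since the original YAML from the question is only available as an image; the `valueFrom.secretKeyRef` structure is the standard Kubernetes way to populate an environment variable from a Secret.

```python
import base64
import json

# Hypothetical names: Secret "myapp1-db" with key "password" are for illustration only.

def make_secret(name: str, key: str, value: str) -> dict:
    """Build a Kubernetes Secret manifest; values under `data` must be base64-encoded."""
    encoded = base64.b64encode(value.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {key: encoded},
    }


def env_from_secret(env_name: str, secret_name: str, key: str) -> dict:
    """Container env entry that reads its value from a Secret instead of plain text."""
    return {
        "name": env_name,
        "valueFrom": {"secretKeyRef": {"name": secret_name, "key": key}},
    }


if __name__ == "__main__":
    secret = make_secret("myapp1-db", "password", "example-password")
    env = env_from_secret("DB_PASSWORD", "myapp1-db", "password")
    print(json.dumps(secret, indent=2))
    print(json.dumps(env, indent=2))
```

Note that base64 is only an encoding, not encryption, so access to Secrets should still be restricted (for example with RBAC).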

You are running an application on multiple virtual machines within a managed instance group and have autoscaling enabled. The autoscaling policy is configured so that additional instances are added to the group if the CPU utilization of instances goes above 80%. VMs are added until the instance group reaches its maximum limit of five VMs or until CPU utilization of instances lowers to 80%. The initial delay for HTTP health checks against the instances is set to 30 seconds.

The virtual machine instances take around three minutes to become available for users. You observe that when the instance group autoscales, it adds more instances than necessary to support the levels of end-user traffic. You want to properly maintain instance group sizes when autoscaling. What should you do?

A. Set the maximum number of instances to 1.

B. Decrease the maximum number of instances to 3.

C. Use a TCP health check instead of an HTTP health check.

D. Increase the initial delay of the HTTP health check to 200 seconds.

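Answer: D

Because the VM instances take about 3 minutes to become available to users, the 30-second initial delay (cool-down time) is too short and must be increased; 200 seconds covers the roughly 180-second startup.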
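A toy timeline makes the arithmetic concrete: with a 30-second initial delay, every health check that runs before the ~3-minute startup finishes will fail, while a 200-second delay starts checking only after the app is ready. This is a simplified model I made up for illustration; the 10-second check interval is an assumption, and real autoscaler and autohealing behavior is more involved.

```python
# Toy model (assumptions: checks start after the initial delay and repeat at a
# fixed, hypothetical interval; a check fails if the VM has not finished booting).

def failing_checks(boot_seconds: int, initial_delay: int, interval: int) -> int:
    """Count health checks that run before the VM finishes booting."""
    if interval <= 0:
        raise ValueError("interval must be positive")
    count = 0
    t = initial_delay
    while t < boot_seconds:
        count += 1
        t += interval
    return count


if __name__ == "__main__":
    boot = 180  # about 3 minutes until the app is ready, per the question
    print(failing_checks(boot, initial_delay=30, interval=10))   # 15
    print(failing_checks(boot, initial_delay=200, interval=10))  # 0
```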

You need to select and configure compute resources for a set of batch processing jobs. These jobs take around 2 hours to complete and are run nightly. You want to minimize service costs. What should you do?

A. Select Google Kubernetes Engine. Use a single-node cluster with a small instance type.

B. Select Google Kubernetes Engine. Use a three-node cluster with micro instance types.

C. Select Compute Engine. Use preemptible VM instances of the appropriate standard machine type.

D. Select Compute Engine. Use VM instance types that support micro bursting.

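Answer: C

To minimize cost, choose Compute Engine and use preemptible VM instances; preemptible VMs run for at most 24 hours, which is fine for a nightly 2-hour batch job.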

12Page

You recently deployed a new version of an application to App Engine and then discovered a bug in the release. You need to immediately revert to the prior version of the application. What should you do?

A. Run gcloud app restore.

B. On the App Engine page of the GCP Console, select the application that needs to be reverted and click Revert.

C. On the App Engine Versions page of the GCP Console, route 100% of the traffic to the previous version.

D. Deploy the original version as a separate application. Then go to App Engine settings and split traffic between applications so that the original version serves 100% of the requests.

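Answer: C

The site says D is the answer, but in the discussion C is the overwhelming choice.

On the App Engine Versions page of the GCP Console, you can route 100% of the traffic to the previous version.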

You deployed an App Engine application using gcloud app deploy, but it did not deploy to the intended project. You want to find out why this happened and where the application deployed. What should you do?

A. Check the app.yaml file for your application and check project settings.

B. Check the web-application.xml file for your application and check project settings.

C. Go to Deployment Manager and review settings for deployment of applications.

D. Go to Cloud Shell and run gcloud config list to review the Google Cloud configuration used for deployment.

Answer: A

The app.yaml file specifies all of the app's runtime configuration, including the version and URLs.

You need to check whether the project is specified in that YAML file.

You want to configure 10 Compute Engine instances for availability when maintenance occurs. Your requirements state that these instances should attempt to automatically restart if they crash. Also, the instances should be highly available including during system maintenance. What should you do?

A. Create an instance template for the instances. Set the 'Automatic Restart' to on. Set the 'On-host maintenance' to Migrate VM instance. Add the instance template to an instance group.

B. Create an instance template for the instances. Set 'Automatic Restart' to off. Set 'On-host maintenance' to Terminate VM instances. Add the instance template to an instance group.

C. Create an instance group for the instances. Set the 'Autohealing' health check to healthy (HTTP).

D. Create an instance group for the instance. Verify that the 'Advanced creation options' setting for 'do not retry machine creation' is set to off.

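Answer: A

The site says B is the answer, but in the discussion A is the overwhelming choice.

If you configure an instance's availability policy to use live migration, Compute Engine migrates the VM instance during host maintenance,

which keeps the application from being interrupted during the event and improves availability.

Setting 'Automatic Restart' to on also covers the requirement that crashed instances restart automatically.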

You host a static website on Cloud Storage. Recently, you began to include links to PDF files on this site. Currently, when users click on the links to these PDF files, their browsers prompt them to save the file onto their local system. Instead, you want the clicked PDF files to be displayed within the browser window directly, without prompting the user to save the file locally. What should you do?

A. Enable Cloud CDN on the website frontend.

B. Enable 'Share publicly' on the PDF file objects.

C. Set Content-Type metadata to application/pdf on the PDF file objects.

D. Add a label to the storage bucket with a key of Content-Type and value of application/pdf.

Answer: C

Set the Content-Type metadata on the PDF file objects to application/pdf so that browsers know they can render the file inline.
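A small Python sketch of picking the right Content-Type before uploading, using the standard `mimetypes` module. The fallback to `application/octet-stream` is my own choice for unknown extensions; a generic type like that is what typically makes browsers prompt for a download. For objects already in the bucket, gsutil can set the metadata with `gsutil setmeta -h "Content-Type:application/pdf"`.

```python
import mimetypes

# Sketch: decide which Content-Type metadata to set on an object.
# Unknown extensions fall back to a generic binary type (an assumption of this sketch).

FALLBACK = "application/octet-stream"


def content_type_for(object_name: str) -> str:
    guessed, _encoding = mimetypes.guess_type(object_name)
    return guessed or FALLBACK


if __name__ == "__main__":
    for name in ["docs/manual.pdf", "index.html", "blob.zzqqxx"]:
        print(name, "->", content_type_for(name))
```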

13Page

You have a virtual machine that is currently configured with 2 vCPUs and 4 GB of memory. It is running out of memory. You want to upgrade the virtual machine to have 8 GB of memory. What should you do?

A. Rely on live migration to move the workload to a machine with more memory.

B. Use gcloud to add metadata to the VM. Set the key to required-memory-size and the value to 8 GB.

C. Stop the VM, change the machine type to n1-standard-8, and start the VM.

D. Stop the VM, increase the memory to 8 GB, and start the VM.

Answer: D

n1-standard-8 means 8 vCPUs (with 30 GB of memory), not 8 GB of memory.

Memory cannot be increased without stopping the VM instance.
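"Increase the memory to 8 GB" in practice means switching to a custom machine type named `custom-<vCPUs>-<memory in MB>`. The sketch below builds that name and checks it against the N1 custom machine rules as I understand them (1 or an even number of vCPUs, memory in multiples of 256 MB, 0.9 to 6.5 GB per vCPU without extended memory); treat those limits as assumptions and verify them against the current documentation.

```python
# Sketch: build an N1 custom machine type name and validate it against
# assumed N1 limits (check the current Compute Engine docs before relying on them).

def n1_custom_machine_type(vcpus: int, memory_gb: float) -> str:
    memory_mb = int(memory_gb * 1024)
    if vcpus != 1 and vcpus % 2 != 0:
        raise ValueError("N1 custom types need 1 vCPU or an even number of vCPUs")
    if memory_mb % 256 != 0:
        raise ValueError("memory must be a multiple of 256 MB")
    per_vcpu_gb = memory_gb / vcpus
    if not 0.9 <= per_vcpu_gb <= 6.5:
        raise ValueError(f"{per_vcpu_gb:.2f} GB per vCPU is outside 0.9-6.5 GB")
    return f"custom-{vcpus}-{memory_mb}"


if __name__ == "__main__":
    print(n1_custom_machine_type(2, 8))  # custom-2-8192: 2 vCPUs, 8 GB
```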

You have production and test workloads that you want to deploy on Compute Engine. Production VMs need to be in a different subnet than the test VMs. All the VMs must be able to reach each other over Internal IP without creating additional routes. You need to set up VPC and the 2 subnets. Which configuration meets these requirements?

A. Create a single custom VPC with 2 subnets. Create each subnet in a different region and with a different CIDR range.

B. Create a single custom VPC with 2 subnets. Create each subnet in the same region and with the same CIDR range.

C. Create 2 custom VPCs, each with a single subnet. Create each subnet in a different region and with a different CIDR range.

D. Create 2 custom VPCs, each with a single subnet. Create each subnet in the same region and with the same CIDR range.

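Answer: A

B is wrong because two subnets in the same VPC cannot use the same CIDR range.

A subnet's primary and secondary ranges cannot overlap with any allocated range, or with the primary or secondary ranges of another subnet in the same network.

Also, a VPC is inherently global: subnets are regional, but they are connected worldwide, so instances in different regions of the same VPC reach each other over internal IPs without extra routes. C and D use two VPCs, which cannot communicate over internal IPs without peering or a VPN.

So one VPC with a different CIDR range for each subnet is enough for this purpose.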
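The overlap rule is easy to check with Python's standard `ipaddress` module. A minimal sketch; the subnet names and ranges are hypothetical examples, not taken from the question.

```python
import ipaddress
from itertools import combinations

# Sketch: report every pair of planned subnet ranges in one VPC that overlap.

def overlapping_pairs(cidrs: dict[str, str]) -> list[tuple[str, str]]:
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(nets, 2)
        if nets[a].overlaps(nets[b])
    ]


if __name__ == "__main__":
    option_a = {"prod-us-central1": "10.0.1.0/24", "test-europe-west1": "10.0.2.0/24"}
    option_b = {"prod-us-central1": "10.0.1.0/24", "test-us-central1": "10.0.1.0/24"}
    print(overlapping_pairs(option_a))  # []
    print(overlapping_pairs(option_b))  # [('prod-us-central1', 'test-us-central1')]
```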

You need to create an autoscaling managed instance group for an HTTPS web application. You want to make sure that unhealthy VMs are recreated. What should you do?

A. Create a health check on port 443 and use that when creating the Managed Instance Group.

B. Select Multi-Zone instead of Single-Zone when creating the Managed Instance Group.

C. In the Instance Template, add the label 'health-check'.

D. In the Instance Template, add a startup script that sends a heartbeat to the metadata server.

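Answer: A

The site says C is the answer, but in the discussion A is the overwhelming choice.

C and D are wrong because the health check is attached when creating the instance group, not in the instance template.

When creating the MIG, set up autohealing with a health check on port 443.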

Your company has a Google Cloud Platform project that uses BigQuery for data warehousing. Your data science team changes frequently and has few members.

You need to allow members of this team to perform queries. You want to follow Google-recommended practices. What should you do?

A. 1. Create an IAM entry for each data scientist's user account. 2. Assign the BigQuery jobUser role to the group.

B. 1. Create an IAM entry for each data scientist's user account. 2. Assign the BigQuery dataViewer user role to the group.

C. 1. Create a dedicated Google group in Cloud Identity. 2. Add each data scientist's user account to the group. 3. Assign the BigQuery jobUser role to the group.

D. 1. Create a dedicated Google group in Cloud Identity. 2. Add each data scientist's user account to the group. 3. Assign the BigQuery dataViewer user role to the group.

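Answer: C

The site says D is the answer, but in the discussion C is the overwhelming choice.

B and D are wrong because dataViewer does not let users run queries (it can read table data but cannot create query jobs).

A is wrong because, for a team whose membership changes frequently, Google recommends granting roles to a group rather than to individual user accounts.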

14Page

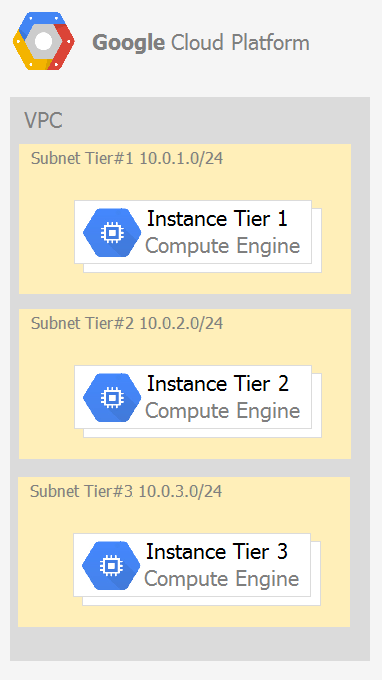

Your company has a 3-tier solution running on Compute Engine. The configuration of the current infrastructure is shown below.

Each tier has a service account that is associated with all instances within it. You need to enable communication on TCP port 8080 between tiers as follows:
• Instances in tier #1 must communicate with tier #2.
• Instances in tier #2 must communicate with tier #3.

What should you do?

A. 1. Create an ingress firewall rule with the following settings: • Targets: all instances • Source filter: IP ranges (with the range set to 10.0.2.0/24) • Protocols: allow all 2. Create an ingress firewall rule with the following settings: • Targets: all instances • Source filter: IP ranges (with the range set to 10.0.1.0/24) • Protocols: allow all

B. 1. Create an ingress firewall rule with the following settings: • Targets: all instances with tier #2 service account • Source filter: all instances with tier #1 service account • Protocols: allow TCP:8080 2. Create an ingress firewall rule with the following settings: • Targets: all instances with tier #3 service account • Source filter: all instances with tier #2 service account • Protocols: allow TCP: 8080

C. 1. Create an ingress firewall rule with the following settings: • Targets: all instances with tier #2 service account • Source filter: all instances with tier #1 service account • Protocols: allow all 2. Create an ingress firewall rule with the following settings: • Targets: all instances with tier #3 service account • Source filter: all instances with tier #2 service account • Protocols: allow all

D. 1. Create an egress firewall rule with the following settings: • Targets: all instances • Source filter: IP ranges (with the range set to 10.0.2.0/24) • Protocols: allow TCP: 8080 2. Create an egress firewall rule with the following settings: • Targets: all instances • Source filter: IP ranges (with the range set to 10.0.1.0/24) • Protocols: allow TCP: 8080

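Answer: B

First, tier #1 (VM1) is the source and tier #2 (VM2) is the target, so create an ingress rule on tier #2's service account that allows traffic from tier #1's service account.

Second, tier #2 (VM2) is the source and tier #3 (VM3) is the target, so create an ingress rule on tier #3's service account that allows traffic from tier #2's service account. C uses the same service accounts but allows all protocols, while only TCP 8080 is required, so B is the tighter, correct choice.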
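A toy model of the two ingress rules in option B, to show which flows they permit. The service-account names are hypothetical, and real VPC firewall evaluation also involves priorities, deny rules, and implied rules, which this sketch ignores apart from the default deny for ingress.

```python
from dataclasses import dataclass

# Toy model of service-account-based ingress rules (illustrative names only).

@dataclass(frozen=True)
class IngressRule:
    target_sa: str
    source_sa: str
    protocol: str
    port: int


RULES = [
    IngressRule(target_sa="tier2-sa", source_sa="tier1-sa", protocol="tcp", port=8080),
    IngressRule(target_sa="tier3-sa", source_sa="tier2-sa", protocol="tcp", port=8080),
]


def is_allowed(src_sa: str, dst_sa: str, protocol: str, port: int) -> bool:
    """Ingress is denied by default unless some rule matches the flow."""
    return any(
        r.target_sa == dst_sa and r.source_sa == src_sa
        and r.protocol == protocol and r.port == port
        for r in RULES
    )


if __name__ == "__main__":
    print(is_allowed("tier1-sa", "tier2-sa", "tcp", 8080))  # True
    print(is_allowed("tier2-sa", "tier3-sa", "tcp", 8080))  # True
    print(is_allowed("tier1-sa", "tier3-sa", "tcp", 8080))  # False: no direct rule
    print(is_allowed("tier1-sa", "tier2-sa", "tcp", 22))    # False: only 8080 is open
```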

You are given a project with a single virtual private cloud (VPC) and a single subnetwork in the us-central1 region. There is a Compute Engine instance hosting an application in this subnetwork. You need to deploy a new instance in the same project in the europe-west1 region. This new instance needs access to the application. You want to follow Google-recommended practices. What should you do?

A. 1. Create a subnetwork in the same VPC, in europe-west1. 2. Create the new instance in the new subnetwork and use the first instance's private address as the endpoint.

B. 1. Create a VPC and a subnetwork in europe-west1. 2. Expose the application with an internal load balancer. 3. Create the new instance in the new subnetwork and use the load balancer's address as the endpoint.

C. 1. Create a subnetwork in the same VPC, in europe-west1. 2. Use Cloud VPN to connect the two subnetworks. 3. Create the new instance in the new subnetwork and use the first instance's private address as the endpoint.

D. 1. Create a VPC and a subnetwork in europe-west1. 2. Peer the 2 VPCs. 3. Create the new instance in the new subnetwork and use the first instance's private address as the endpoint.

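Answer: A

B appears to guard against instance 1 failing (its IP changing and the connection breaking),

but adding an internal load balancer only adds unnecessary cost.

C is wrong because a Cloud VPN connection requires reserving addresses, so you pay for the reserved IPs and the resources used; besides, subnets in the same VPC already reach each other over internal IPs, so a VPN between them is unnecessary.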

Your projects incurred more costs than you expected last month. Your research reveals that a development GKE container emitted a huge number of logs, which resulted in higher costs. You want to disable the logs quickly using the minimum number of steps. What should you do?

A. 1. Go to the Logs ingestion window in Stackdriver Logging, and disable the log source for the GKE container resource.

B. 1. Go to the Logs ingestion window in Stackdriver Logging, and disable the log source for the GKE Cluster Operations resource.

C. 1. Go to the GKE console, and delete existing clusters. 2. Recreate a new cluster. 3. Clear the option to enable legacy Stackdriver Logging.

D. 1. Go to the GKE console, and delete existing clusters. 2. Recreate a new cluster. 3. Clear the option to enable legacy Stackdriver Monitoring.

Answer: A

A is correct because the resource type that is producing the logs is the Kubernetes (GKE) container.

You have a website hosted on App Engine standard environment. You want 1% of your users to see a new test version of the website. You want to minimize complexity. What should you do?

A. Deploy the new version in the same application and use the --migrate option.

B. Deploy the new version in the same application and use the --splits option to give a weight of 99 to the current version and a weight of 1 to the new version.

C. Create a new App Engine application in the same project. Deploy the new version in that application. Use the App Engine library to proxy 1% of the requests to the new version.

D. Create a new App Engine application in the same project. Deploy the new version in that application. Configure your network load balancer to send 1% of the traffic to that new application.

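Answer: B

The site says C is the answer, but in the discussion B is the overwhelming choice.

--splits takes key-value pairs describing the proportion of traffic that should go to each version.

The split values are added together and used as relative weights.

C and D are wrong because a project can contain only one App Engine application.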
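A minimal sketch of the "values are added together and used as weights" idea: normalize the weights into traffic fractions. The version names are hypothetical, and App Engine's own rounding of the resulting fractions may differ from plain floating-point division.

```python
# Sketch: turn relative split weights into traffic fractions.

def split_fractions(weights: dict[str, float]) -> dict[str, float]:
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {version: w / total for version, w in weights.items()}


if __name__ == "__main__":
    print(split_fractions({"v1": 99, "v2": 1}))     # v1 gets 99%, v2 gets 1%
    print(split_fractions({"v1": 0.5, "v2": 0.5}))  # an even split
```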

15Page

You have a web application deployed as a managed instance group. You have a new version of the application to gradually deploy. Your web application is currently receiving live web traffic. You want to ensure that the available capacity does not decrease during the deployment. What should you do?

A. Perform a rolling-action start-update with maxSurge set to 0 and maxUnavailable set to 1.

B. Perform a rolling-action start-update with maxSurge set to 1 and maxUnavailable set to 0.

C. Create a new managed instance group with an updated instance template. Add the group to the backend service for the load balancer. When all instances in the new managed instance group are healthy, delete the old managed instance group.

D. Create a new instance template with the new application version. Update the existing managed instance group with the new instance template. Delete the instances in the managed instance group to allow the managed instance group to recreate the instance using the new instance template.

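Answer: B

maxSurge = the maximum number of additional instances that can be created during the update

maxUnavailable = the maximum number of instances that can be unavailable during the update

Because overall capacity must stay the same, maxUnavailable must be set to 0.

At the same time, new instances must be allowed to be created, so maxSurge is set to 1.

C is wrong because it is expensive and harder to set up, and D is wrong because it does not keep overall capacity intact.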
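A simplified simulation of a rolling replacement shows why maxSurge=1 with maxUnavailable=0 never dips below the target size. The model is my own assumption: in each round, up to maxSurge new VMs come up first, then up to maxSurge + maxUnavailable old VMs are removed, and any extra replacements are created afterwards. Real MIG behavior has more nuance (readiness, zones, rounding of percentages).

```python
# Simplified rolling-update model (assumptions described above).

def rolling_update_bounds(target: int, max_surge: int, max_unavailable: int) -> tuple[int, int]:
    """Return (lowest, highest) number of serving instances during the update."""
    if target <= 0:
        raise ValueError("target must be positive")
    if max_surge <= 0 and max_unavailable <= 0:
        raise ValueError("max_surge or max_unavailable must be > 0 to make progress")
    old = target  # instances still running the old template
    lowest = highest = target
    while old > 0:
        surge = min(max_surge, old)                  # new VMs started first
        highest = max(highest, target + surge)
        removed = min(old, surge + max_unavailable)  # old VMs deleted this round
        lowest = min(lowest, target + surge - removed)
        old -= removed                               # replacements restore the target size
    return lowest, highest


if __name__ == "__main__":
    print(rolling_update_bounds(5, max_surge=1, max_unavailable=0))  # (5, 6): option B
    print(rolling_update_bounds(5, max_surge=0, max_unavailable=1))  # (4, 5): option A
```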

You are building an application that stores relational data from users. Users across the globe will use this application. Your CTO is concerned about the scaling requirements because the size of the user base is unknown. You need to implement a database solution that can scale with your user growth with minimum configuration changes. Which storage solution should you use?

A. Cloud SQL

B. Cloud Spanner

C. Cloud Firestore

D. Cloud Datastore

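Answer: B

The site says D is the answer, but in the discussion B is the overwhelming choice.

First, the relational databases among the options are Cloud SQL and Cloud Spanner,

and Cloud SQL does not scale as far as Spanner and is not well suited to global traffic.

Cloud Datastore is wrong because it is a schemaless NoSQL database, and Cloud Firestore is likewise a NoSQL document database.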

You are the organization and billing administrator for your company. The engineering team has the Project Creator role on the organization. You do not want the engineering team to be able to link projects to the billing account. Only the finance team should be able to link a project to a billing account, but they should not be able to make any other changes to projects. What should you do?

A. Assign the finance team only the Billing Account User role on the billing account.

B. Assign the engineering team only the Billing Account User role on the billing account.

C. Assign the finance team the Billing Account User role on the billing account and the Project Billing Manager role on the organization.

D. Assign the engineering team the Billing Account User role on the billing account and the Project Billing Manager role on the organization.

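Answer: C

The site says D is the answer, and the discussion is split between A and C. I judge C to be correct.

A Billing Account User can link projects only to billing accounts they have access to,

and the finance team also needs the Project Billing Manager role on the organization to change billing on those projects, without gaining any other project permissions.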

You have an application running in Google Kubernetes Engine (GKE) with cluster autoscaling enabled. The application exposes a TCP endpoint. There are several replicas of this application. You have a Compute Engine instance in the same region, but in another Virtual Private Cloud (VPC), called gce-network, that has no overlapping IP ranges with the first VPC. This instance needs to connect to the application on GKE. You want to minimize effort. What should you do?

A. 1. In GKE, create a Service of type LoadBalancer that uses the application's Pods as backend. 2. Set the service's externalTrafficPolicy to Cluster. 3. Configure the Compute Engine instance to use the address of the load balancer that has been created.

B. 1. In GKE, create a Service of type NodePort that uses the application's Pods as backend. 2. Create a Compute Engine instance called proxy with 2 network interfaces, one in each VPC. 3. Use iptables on this instance to forward traffic from gce-network to the GKE nodes. 4. Configure the Compute Engine instance to use the address of proxy in gce-network as endpoint.

C. 1. In GKE, create a Service of type LoadBalancer that uses the application's Pods as backend. 2. Add an annotation to this service: cloud.google.com/load-balancer-type: Internal 3. Peer the two VPCs together. 4. Configure the Compute Engine instance to use the address of the load balancer that has been created.

D. 1. In GKE, create a Service of type LoadBalancer that uses the application's Pods as backend. 2. Add a Cloud Armor Security Policy to the load balancer that whitelists the internal IPs of the MIG's instances. 3. Configure the Compute Engine instance to use the address of the load balancer that has been created.

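Answer: C

The site says A is the answer, but in the discussion C is the overwhelming choice.

Because the two are in different VPCs, VPC peering is needed.

A takes the least effort, but it exposes the application to the internet, so it is not a recommended approach.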

16Page

Your organization is a financial company that needs to store audit log files for 3 years. Your organization has hundreds of Google Cloud projects. You need to implement a cost-effective approach for log file retention. What should you do?

A. Create an export to the sink that saves logs from Cloud Audit to BigQuery.

B. Create an export to the sink that saves logs from Cloud Audit to a Coldline Storage bucket.

C. Write a custom script that uses logging API to copy the logs from Stackdriver logs to BigQuery.

D. Export these logs to Cloud Pub/Sub and write a Cloud Dataflow pipeline to store logs to Cloud SQL.

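Answer: B

The site says A is the answer, but in the discussion B is the overwhelming choice.

Given hundreds of projects and a 3-year retention requirement, the best-practice and most cost-effective option is Coldline Storage, so B is correct.

Best practice: Google's guidance on exporting logs from Stackdriver Logging to Cloud Storage recommends lifecycle rules that move logs to Nearline after 60 days and to Coldline after 120 days, then delete them after 2,555 days (about 7 years).

The key point is that a financial services company must retain logs for a set period (e.g., under PCI-DSS requirements), so the question is about choosing a cost-effective retention approach.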
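The lifecycle rules described above can be expressed in the JSON form that Cloud Storage uses for lifecycle configuration (`{"rule": [...]}` with `action` and `condition` objects). This Python sketch generates it; the 60/120-day transitions follow the practice quoted above, while the deletion age is a parameter: about 1,095 days for this question's 3 years, 2,555 days in Google's 7-year example. Verify the exact file format your tool expects before applying it.

```python
import json

# Sketch: lifecycle configuration for a log-archive bucket.

def log_bucket_lifecycle(delete_after_days: int) -> dict:
    if delete_after_days <= 120:
        raise ValueError("deletion age should come after the Coldline transition")
    return {
        "rule": [
            {"action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
             "condition": {"age": 60}},
            {"action": {"type": "SetStorageClass", "storageClass": "COLDLINE"},
             "condition": {"age": 120}},
            {"action": {"type": "Delete"},
             "condition": {"age": delete_after_days}},
        ]
    }


if __name__ == "__main__":
    print(json.dumps(log_bucket_lifecycle(3 * 365), indent=2))
```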

You want to run a single caching HTTP reverse proxy on GCP for a latency-sensitive website. This specific reverse proxy consumes almost no CPU. You want to have a 30-GB in-memory cache, and need an additional 2 GB of memory for the rest of the processes. You want to minimize cost. How should you run this reverse proxy?

A. Create a Cloud Memorystore for Redis instance with 32-GB capacity.

B. Run it on Compute Engine, and choose a custom instance type with 6 vCPUs and 32 GB of memory.

C. Package it in a container image, and run it on Kubernetes Engine, using n1-standard-32 instances as nodes.

D. Run it on Compute Engine, choose the instance type n1-standard-1, and add an SSD persistent disk of 32 GB.

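Answer: A

Considering cost alone, B would be the answer, but because the question is about a latency-sensitive website,

I judge Redis to be the answer.

Memorystore for Redis provides a fast in-memory store for use cases that need fast, real-time data processing.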

You are hosting an application on bare-metal servers in your own data center. The application needs access to Cloud Storage. However, security policies prevent the servers hosting the application from having public IP addresses or access to the internet. You want to follow Google-recommended practices to provide the application with access to Cloud Storage. What should you do?

A. 1. Use nslookup to get the IP address for storage.googleapis.com. 2. Negotiate with the security team to be able to give a public IP address to the servers. 3. Only allow egress traffic from those servers to the IP addresses for storage.googleapis.com.

B. 1. Using Cloud VPN, create a VPN tunnel to a Virtual Private Cloud (VPC) in Google Cloud Platform (GCP). 2. In this VPC, create a Compute Engine instance and install the Squid proxy server on this instance. 3. Configure your servers to use that instance as a proxy to access Cloud Storage.

C. 1. Use Migrate for Compute Engine (formerly known as Velostrata) to migrate those servers to Compute Engine. 2. Create an internal load balancer (ILB) that uses storage.googleapis.com as backend. 3. Configure your new instances to use this ILB as proxy.

D. 1. Using Cloud VPN or Interconnect, create a tunnel to a VPC in GCP. 2. Use Cloud Router to create a custom route advertisement for 199.36.153.4/30. Announce that network to your on-premises network through the VPN tunnel. 3. In your on-premises network, configure your DNS server to resolve *.googleapis.com as a CNAME to restricted.googleapis.com.

Answer: D

D is correct according to the setup for Private Google Access for on-premises hosts.

https://cloud.google.com/vpc/docs/configure-private-google-access-hybrid
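Two pieces of option D can be illustrated with the standard library: the four addresses in 199.36.153.4/30 (the range advertised to on-premises over the VPN), and a toy version of the DNS rule that rewrites `*.googleapis.com` names to `restricted.googleapis.com`. The function names are my own; a real deployment configures this in the on-premises DNS server, not in application code.

```python
import ipaddress
from typing import Optional

# Sketch: the advertised /30 and a toy CNAME rule for *.googleapis.com.

RESTRICTED_RANGE = ipaddress.ip_network("199.36.153.4/30")


def restricted_vips() -> list[str]:
    """All addresses in the /30 that on-premises routers learn via Cloud Router."""
    return [str(ip) for ip in RESTRICTED_RANGE]


def cname_target(hostname: str) -> Optional[str]:
    """Return the CNAME target for *.googleapis.com names, else None (no rewrite)."""
    name = hostname.rstrip(".").lower()
    if name.endswith(".googleapis.com") and name != "restricted.googleapis.com":
        return "restricted.googleapis.com"
    return None


if __name__ == "__main__":
    print(restricted_vips())
    print(cname_target("storage.googleapis.com"))
```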

You want to deploy an application on Cloud Run that processes messages from a Cloud Pub/Sub topic. You want to follow Google-recommended practices. What should you do?

A. 1. Create a Cloud Function that uses a Cloud Pub/Sub trigger on that topic. 2. Call your application on Cloud Run from the Cloud Function for every message.

B. 1. Grant the Pub/Sub Subscriber role to the service account used by Cloud Run. 2. Create a Cloud Pub/Sub subscription for that topic. 3. Make your application pull messages from that subscription.

C. 1. Create a service account. 2. Give the Cloud Run Invoker role to that service account for your Cloud Run application. 3. Create a Cloud Pub/Sub subscription that uses that service account and uses your Cloud Run application as the push endpoint.

D. 1. Deploy your application on Cloud Run on GKE with the connectivity set to Internal. 2. Create a Cloud Pub/Sub subscription for that topic. 3. In the same Google Kubernetes Engine cluster as your application, deploy a container that takes the messages and sends them to your application.

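Answer: C

The site says D is the answer; the discussion is split between B and C, with slightly more votes for C.

C matches Google's documented pattern for Cloud Run: a push subscription that authenticates with a service account holding the Cloud Run Invoker role and uses the Cloud Run service URL as the push endpoint.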
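With a push subscription, the Cloud Run service receives each message as an HTTP POST whose JSON body wraps the message, with the payload base64-encoded in `message.data`. A minimal sketch of decoding that body; the sample envelope is hand-made for illustration, and in a real handler a success (2xx) response acknowledges the message.

```python
import base64
import json

# Sketch: decode the payload from a Pub/Sub push request body.

def decode_push_body(body: bytes) -> str:
    envelope = json.loads(body)
    message = envelope["message"]
    data = message.get("data", "")
    return base64.b64decode(data).decode("utf-8")


if __name__ == "__main__":
    sample = json.dumps({
        "message": {
            "data": base64.b64encode(b"hello from pub/sub").decode("ascii"),
            "messageId": "1",
            "attributes": {},
        },
        "subscription": "projects/my-project/subscriptions/my-sub",  # hypothetical
    }).encode("utf-8")
    print(decode_push_body(sample))  # hello from pub/sub
```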

17Page

You need to deploy an application, which is packaged in a container image, in a new project. The application exposes an HTTP endpoint and receives very few requests per day. You want to minimize costs. What should you do?

A. Deploy the container on Cloud Run.

B. Deploy the container on Cloud Run on GKE.

C. Deploy the container on App Engine Flexible.

D. Deploy the container on Google Kubernetes Engine, with cluster autoscaling and horizontal pod autoscaling enabled.

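Answer: A

A Cloud Run service exposes an HTTP endpoint, and since the app receives very few requests per day, GKE is unnecessary.

Also, Cloud Run automatically scales up from zero and back down as traffic changes,

and you are billed only for the exact resources you use.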

Your company has an existing GCP organization with hundreds of projects and a billing account. Your company recently acquired another company that also has hundreds of projects and its own billing account. You would like to consolidate all GCP costs of both GCP organizations onto a single invoice. You would like to consolidate all costs as of tomorrow. What should you do?

A. Link the acquired company's projects to your company's billing account.

B. Configure the acquired company's billing account and your company's billing account to export the billing data into the same BigQuery dataset.

C. Migrate the acquired company's projects into your company's GCP organization. Link the migrated projects to your company's billing account.

D. Create a new GCP organization and a new billing account. Migrate the acquired company's projects and your company's projects into the new GCP organization and link the projects to the new billing account.

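Answer: C

The site says D is the answer and the discussion is split, but I think C is the answer,

because the acquired company's organization is not connected to your organization.

In particular, the URL below supports C as the answer.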

https://cloud.google.com/resource-manager/docs/migrating-projects-billing

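However, moving hundreds of projects is a very tedious job,

and because the projects also belong to the acquired company's separate organization, the migration might require help from Google Cloud support,

so A is also worth considering,

especially since the requirement is to have costs consolidated starting the very next day.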

You built an application on Google Cloud Platform that uses Cloud Spanner. Your support team needs to monitor the environment but should not have access to table data. You need a streamlined solution to grant the correct permissions to your support team, and you want to follow Google-recommended practices. What should you do?

A. Add the support team group to the roles/monitoring.viewer role

B. Add the support team group to the roles/spanner.databaseUser role.

C. Add the support team group to the roles/spanner.databaseReader role.

D. Add the support team group to the roles/stackdriver.accounts.viewer role.

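Answer: A

The site says B is the answer, but in the discussion A is the overwhelming choice.

The Spanner roles are irrelevant to this use case; the team only needs access to monitor the environment.

The roles/monitoring.viewer role gives read-only access to all monitoring data in the GCP Console and the API.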

For analysis purposes, you need to send all the logs from all of your Compute Engine instances to a BigQuery dataset called platform-logs. You have already installed the Stackdriver Logging agent on all the instances. You want to minimize cost. What should you do?

A. 1. Give the BigQuery Data Editor role on the platform-logs dataset to the service accounts used by your instances. 2. Update your instances' metadata to add the following value: logs-destination: bq://platform-logs.

B. 1. In Stackdriver Logging, create a logs export with a Cloud Pub/Sub topic called logs as a sink. 2. Create a Cloud Function that is triggered by messages in the logs topic. 3. Configure that Cloud Function to drop logs that are not from Compute Engine and to insert Compute Engine logs in the platform-logs dataset.

C. 1. In Stackdriver Logging, create a filter to view only Compute Engine logs. 2. Click Create Export. 3. Choose BigQuery as Sink Service, and the platform-logs dataset as Sink Destination.

D. 1. Create a Cloud Function that has the BigQuery User role on the platform-logs dataset. 2. Configure this Cloud Function to create a BigQuery Job that executes this query: INSERT INTO dataset.platform-logs (timestamp, log) SELECT timestamp, log FROM compute.logs WHERE timestamp > DATE_SUB(CURRENT_DATE(), INTERVAL 1 DAY) 3. Use Cloud Scheduler to trigger this Cloud Function once a day.

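Answer: C

This is the simplest and most cost-effective approach: a filtered log export sends only Compute Engine logs straight to BigQuery, with no extra services to run.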

18Page

You are using Deployment Manager to create a Google Kubernetes Engine cluster. Using the same Deployment Manager deployment, you also want to create a DaemonSet in the kube-system namespace of the cluster. You want a solution that uses the fewest possible services. What should you do?

A. Add the cluster's API as a new Type Provider in Deployment Manager, and use the new type to create the DaemonSet.

B. Use the Deployment Manager Runtime Configurator to create a new Config resource that contains the DaemonSet definition.

C. With Deployment Manager, create a Compute Engine instance with a startup script that uses kubectl to create the DaemonSet.

D. In the cluster's definition in Deployment Manager, add a metadata that has kube-system as key and the DaemonSet manifest as value.

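Answer: A

The site says C is the answer, but most of the discussion chooses A.

Both C and A would work, but since the solution should use as few services as possible, A is correct.

C additionally requires enabling the Compute Engine service if it is not already enabled, plus running an extra VM.

With A, the cluster's Kubernetes API is registered as a Deployment Manager type provider, so the same deployment can create the DaemonSet directly through that API.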

You are building an application that will run in your data center. The application will use Google Cloud Platform (GCP) services like AutoML. You created a service account that has appropriate access to AutoML. You need to enable authentication to the APIs from your on-premises environment. What should you do?

A. Use service account credentials in your on-premises application.

B. Use gcloud to create a key file for the service account that has appropriate permissions.

C. Set up direct interconnect between your data center and Google Cloud Platform to enable authentication for your on-premises applications.

D. Go to the IAM & admin console, grant a user account permissions similar to the service account permissions, and use this user account for authentication from your data center.

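Answer: B

To use a service account outside Google Cloud, for example on another platform or on premises,

you must first establish the service account's identity, and a public/private key pair provides a secure way to do that.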

You are using Container Registry to centrally store your company's container images in a separate project. In another project, you want to create a Google Kubernetes Engine (GKE) cluster. You want to ensure that Kubernetes can download images from Container Registry. What should you do?

A. In the project where the images are stored, grant the Storage Object Viewer IAM role to the service account used by the Kubernetes nodes.

B. When you create the GKE cluster, choose the Allow full access to all Cloud APIs option under 'Access scopes'.

C. Create a service account, and give it access to Cloud Storage. Create a P12 key for this service account and use it as an imagePullSecrets in Kubernetes.

D. Configure the ACLs on each image in Cloud Storage to give read-only access to the default Compute Engine service account.

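Answer: A

The site says B is the answer, but in the discussion A is the overwhelming choice.

Container Registry uses Cloud Storage buckets as the underlying storage for container images,

and access to images is controlled by granting the appropriate Cloud Storage permissions to users, groups, service accounts, or other identities.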
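To know which bucket option A's Storage Object Viewer grant has to cover, it helps to know how registry hosts map to buckets: images pushed to `gcr.io` live in `artifacts.PROJECT-ID.appspot.com`, and regional hosts add a prefix such as `eu.`. A small sketch of that mapping; the project ID is hypothetical, and domain-scoped project IDs (which use a different bucket format) are not handled.

```python
# Sketch: Container Registry host -> backing Cloud Storage bucket name.

REGION_PREFIX = {
    "gcr.io": "",
    "us.gcr.io": "us.",
    "eu.gcr.io": "eu.",
    "asia.gcr.io": "asia.",
}


def registry_bucket(host: str, project_id: str) -> str:
    if host not in REGION_PREFIX:
        raise ValueError(f"unknown Container Registry host: {host}")
    return f"{REGION_PREFIX[host]}artifacts.{project_id}.appspot.com"


if __name__ == "__main__":
    print(registry_bucket("gcr.io", "images-project"))     # artifacts.images-project.appspot.com
    print(registry_bucket("eu.gcr.io", "images-project"))  # eu.artifacts.images-project.appspot.com
```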

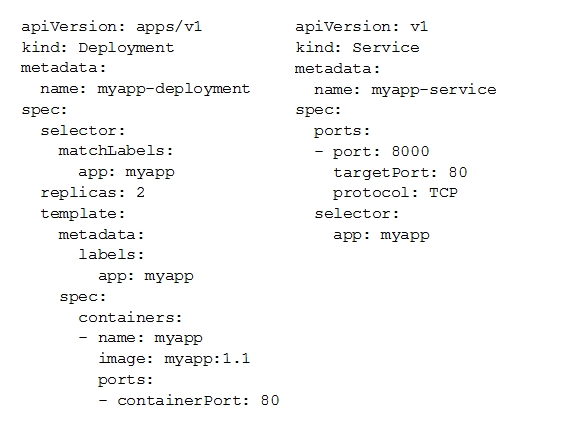

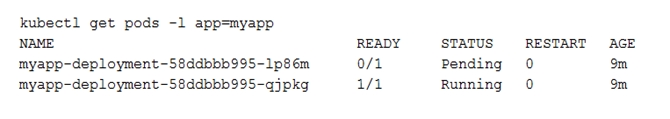

You deployed a new application inside your Google Kubernetes Engine cluster using the YAML file specified below.

You check the status of the deployed pods and notice that one of them is still in PENDING status:

You want to find out why the pod is stuck in pending status. What should you do?

A. Review details of the myapp-service Service object and check for error messages.

B. Review details of the myapp-deployment Deployment object and check for error messages.

C. Review details of myapp-deployment-58ddbbb995-lp86m Pod and check for warning messages.

D. View logs of the container in myapp-deployment-58ddbbb995-lp86m pod and check for warning messages.

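Answer: C

When a pod is stuck in Pending, review the pod's details (including its events).

Container logs are worth checking when a pod is crashing, or is running but not doing anything.

A Pending pod has no running containers yet, so there are no container logs to view.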
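The decision above can be written down as a tiny helper. This is a sketch under the assumption that you already know the pod's phase (for example from `kubectl get pods`). It only returns the kubectl argv to run, and the function name is hypothetical.

```python
from typing import List


def diagnostic_command(pod_name: str, phase: str, namespace: str = "default") -> List[str]:
    """Pick the kubectl command that fits the pod's phase."""
    if phase == "Pending":
        # No container has started, so there are no logs. The Events section of
        # `kubectl describe` explains why scheduling failed (e.g. insufficient CPU).
        return ["kubectl", "describe", "pod", pod_name, "-n", namespace]
    # Otherwise (e.g. a Running pod that crashes or does nothing), read the container output.
    return ["kubectl", "logs", pod_name, "-n", namespace]
```

For the pod in this question, `diagnostic_command("myapp-deployment-58ddbbb995-lp86m", "Pending")` yields the `kubectl describe pod` call, which matches option C.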

19Page

You are setting up a Windows VM on Compute Engine and want to make sure you can log in to the VM via RDP. What should you do?

A. After the VM has been created, use your Google Account credentials to log in into the VM.

B. After the VM has been created, use gcloud compute reset-windows-password to retrieve the login credentials for the VM.

C. When creating the VM, add metadata to the instance using 'windows-password' as the key and a password as the value.

D. After the VM has been created, download the JSON private key for the default Compute Engine service account. Use the credentials in the JSON file to log in to the VM.

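Answer: B

The site marks D as correct, but the discussion overwhelmingly favors B.

After the VM has been created, use gcloud compute reset-windows-password

to retrieve the VM's login credentials.

The command lets a user reset and retrieve the password for a Windows VM instance,

and if the Windows account doesn't exist yet, it creates the account and returns the password for the new account.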
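As a sketch, the option B command can be assembled like this. The instance name, zone, and user are hypothetical placeholders. When `--user` is omitted, gcloud falls back to your local username. The command prints the username and a new password that you then use in your RDP client.

```python
from typing import List, Optional


def reset_windows_password_cmd(instance: str, zone: str, user: Optional[str] = None) -> List[str]:
    """argv for `gcloud compute reset-windows-password`."""
    argv = ["gcloud", "compute", "reset-windows-password", instance, f"--zone={zone}"]
    if user:
        # If this Windows account does not exist yet, gcloud creates it and returns its password.
        argv.append(f"--user={user}")
    return argv
```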

You want to configure an SSH connection to a single Compute Engine instance for users in the dev1 group. This instance is the only resource in this particular Google Cloud Platform project that the dev1 users should be able to connect to. What should you do?

A. Set metadata to enable-oslogin=true for the instance. Grant the dev1 group the compute.osLogin role. Direct them to use the Cloud Shell to ssh to that instance.

B. Set metadata to enable-oslogin=true for the instance. Set the service account to no service account for that instance. Direct them to use the Cloud Shell to ssh to that instance.

C. Enable block project wide keys for the instance. Generate an SSH key for each user in the dev1 group. Distribute the keys to dev1 users and direct them to use their third-party tools to connect.

D. Enable block project wide keys for the instance. Generate an SSH key and associate the key with that instance. Distribute the key to dev1 users and direct them to use their third-party tools to connect.

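Answer: A

The site marks D as correct, but the discussion overwhelmingly favors A.

C and D are wrong because you should never distribute private keys or generate SSH keys on behalf of other users.

B is wrong because it only changes the instance's service account, which has nothing to do with the dev group that needs SSH access to the instance.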
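Option A boils down to two gcloud calls. This sketch builds them as argv lists. The instance, zone, and group email are hypothetical. Binding `roles/compute.osLogin` on the instance itself, rather than on the project, is what keeps dev1 limited to this one VM.

```python
from typing import List


def oslogin_commands(instance: str, zone: str, group_email: str) -> List[List[str]]:
    """argv lists: enable OS Login on one VM, then grant the group SSH access to that VM only."""
    enable = [
        "gcloud", "compute", "instances", "add-metadata", instance,
        f"--zone={zone}", "--metadata=enable-oslogin=TRUE",
    ]
    # Instance-level IAM binding: the group gets no access to other resources in the project.
    grant = [
        "gcloud", "compute", "instances", "add-iam-policy-binding", instance,
        f"--zone={zone}", f"--member=group:{group_email}", "--role=roles/compute.osLogin",
    ]
    return [enable, grant]
```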

You need to produce a list of the enabled Google Cloud Platform APIs for a GCP project using the gcloud command line in the Cloud Shell. The project name is my-project. What should you do?

A. Run gcloud projects list to get the project ID, and then run gcloud services list --project <project ID>.

B. Run gcloud init to set the current project to my-project, and then run gcloud services list --available.

C. Run gcloud info to view the account value, and then run gcloud services list --account <Account>.

D. Run gcloud projects describe <project ID> to verify the project value, and then run gcloud services list --available.

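Answer: A

Once you are logged in, running gcloud init and then gcloud services list adds nothing: B and D pass --available, which lists the services that can be enabled rather than the ones already enabled, and C's --account only selects which account gcloud uses, not which project to query.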
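The two steps of option A can be sketched as follows. The function takes the JSON printed by `gcloud projects list --format=json` (each entry carries `projectId` and `name`), finds the ID of the project named my-project, and returns the follow-up `gcloud services list` argv. The sample data in the test is made up. The uniqueness check is there because project names, unlike project IDs, are not guaranteed to be unique.

```python
import json
from typing import List


def enabled_services_cmd(projects_json: str, project_name: str) -> List[str]:
    """Resolve a project name to its ID, then build the command that lists its enabled APIs."""
    projects = json.loads(projects_json)
    ids = [p["projectId"] for p in projects if p.get("name") == project_name]
    if len(ids) != 1:
        raise LookupError(f"expected one project named {project_name!r}, found {len(ids)}")
    # Without --available, `gcloud services list` shows only the enabled services.
    return ["gcloud", "services", "list", f"--project={ids[0]}"]
```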

You are building a new version of an application hosted in an App Engine environment. You want to test the new version with 1% of users before you completely switch your application over to the new version. What should you do?

A. Deploy a new version of your application in Google Kubernetes Engine instead of App Engine and then use GCP Console to split traffic.

B. Deploy a new version of your application in a Compute Engine instance instead of App Engine and then use GCP Console to split traffic.

C. Deploy a new version as a separate app in App Engine. Then configure App Engine using GCP Console to split traffic between the two apps.

D. Deploy a new version of your application in App Engine. Then go to App Engine settings in GCP Console and split traffic between the current version and newly deployed versions accordingly.

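Answer: D

The site marks A as correct, but the discussion overwhelmingly favors D.

The application already runs on App Engine, so A and B are wrong (moving it to GKE or Compute Engine would also be inefficient).

C is wrong because a project can have only one App Engine app.

Creating new instances is also needlessly complex.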
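To make the 1% split concrete, here is a sketch with two parts. `set_traffic_cmd` builds the real `gcloud app services set-traffic` invocation with `--splits` and `--split-by=cookie`, so each user stays on one version. `pick_version` is only a toy model of sticky, weighted splitting (hash the user into [0, 1) and walk the cumulative weights). It is not App Engine's actual routing algorithm, and the version names v1/v2 are hypothetical.

```python
import hashlib
from typing import Dict, List


def set_traffic_cmd(service: str, splits: Dict[str, float]) -> List[str]:
    """argv for `gcloud app services set-traffic`, e.g. {"v1": 0.99, "v2": 0.01}."""
    spec = ",".join(f"{version}={weight}" for version, weight in splits.items())
    return ["gcloud", "app", "services", "set-traffic", service,
            f"--splits={spec}", "--split-by=cookie"]


def pick_version(user_id: str, splits: Dict[str, float]) -> str:
    """Toy model: deterministically map a user to a version in proportion to the weights."""
    if not splits:
        raise ValueError("splits must not be empty")
    digest = hashlib.sha256(user_id.encode()).digest()
    point = int.from_bytes(digest[:8], "big") / 2**64  # uniform in [0, 1)
    total = sum(splits.values())
    cumulative = 0.0
    for version, weight in splits.items():
        cumulative += weight / total
        if point < cumulative:
            return version
    return version  # guard against floating-point rounding on the last bucket
```

Because the mapping is deterministic, the same user keeps seeing the same version, which mirrors what cookie-based splitting aims for.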

20Page

You need to provide a cost estimate for a Kubernetes cluster using the GCP pricing calculator for Kubernetes. Your workload requires high IOPs, and you will also be using disk snapshots. You start by entering the number of nodes, average hours, and average days. What should you do next?

A. Fill in local SSD. Fill in persistent disk storage and snapshot storage.

B. Fill in local SSD. Add estimated cost for cluster management.

C. Select Add GPUs. Fill in persistent disk storage and snapshot storage.

D. Select Add GPUs. Add estimated cost for cluster management.

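Answer: A

The site marks C as correct, but the discussion overwhelmingly favors A.

Local SSDs are physically attached solid-state disks that provide block storage with very high IOPS and low latency, which covers the high-IOPS requirement (GPUs do nothing for disk IOPS).

Local SSDs themselves can't be snapshotted, so the disk snapshots are estimated by filling in persistent disk storage and snapshot storage.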
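The calculator's arithmetic for option A is roughly "node-hours times the node rate, plus the storage line items". This simplified sketch shows that sum. All unit prices are inputs you would read off the pricing calculator. The numbers used in the test are placeholders, not real GCP prices, and the model ignores discounts and proration.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    """Placeholder unit prices; take the real ones from the pricing calculator."""
    node_hour: float            # per node per hour
    local_ssd_gb_month: float   # per GB of local SSD per month
    pd_gb_month: float          # per GB of persistent disk per month
    snapshot_gb_month: float    # per GB of snapshot storage per month


def estimate_monthly_cost(nodes: int, hours_per_day: float, days_per_month: float,
                          local_ssd_gb: float, pd_gb: float, snapshot_gb: float,
                          rates: Rates) -> float:
    """Sum the line items option A fills in: compute, local SSD, persistent disk, snapshots."""
    node_hours = nodes * hours_per_day * days_per_month
    return (node_hours * rates.node_hour
            + local_ssd_gb * rates.local_ssd_gb_month
            + pd_gb * rates.pd_gb_month
            + snapshot_gb * rates.snapshot_gb_month)
```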

You are using Google Kubernetes Engine with autoscaling enabled to host a new application. You want to expose this new application to the public, using HTTPS on a public IP address. What should you do?

A. Create a Kubernetes Service of type NodePort for your application, and a Kubernetes Ingress to expose this Service via a Cloud Load Balancer.

B. Create a Kubernetes Service of type ClusterIP for your application. Configure the public DNS name of your application using the IP of this Service.

C. Create a Kubernetes Service of type NodePort to expose the application on port 443 of each node of the Kubernetes cluster. Configure the public DNS name of your application with the IP of every node of the cluster to achieve load-balancing.

D. Create a HAProxy pod in the cluster to load-balance the traffic to all the pods of the application. Forward the public traffic to HAProxy with an iptable rule. Configure the DNS name of your application using the public IP of the node HAProxy is running on.

Answer: A

Both A and D could work, but configuring an Ingress object backed by Cloud Load Balancing is much simpler, so A is the answer.
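Option A corresponds to two Kubernetes objects. This sketch builds them as Python dicts in Kubernetes API shape: a `NodePort` Service and a `networking.k8s.io/v1` Ingress with a TLS section for HTTPS. On GKE, an Ingress with the default class provisions an external HTTP(S) load balancer. The names, label, ports, and the TLS Secret `myapp-tls` are hypothetical, and the Secret is assumed to exist already.

```python
import json
from typing import Dict


def nodeport_service(name: str, app_label: str, target_port: int) -> Dict:
    """Service of type NodePort that the Ingress can use as its backend."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": {"app": app_label},
            "ports": [{"port": 80, "targetPort": target_port, "protocol": "TCP"}],
        },
    }


def https_ingress(name: str, service_name: str, tls_secret: str) -> Dict:
    """Ingress that terminates HTTPS with the given TLS Secret and forwards to the Service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "tls": [{"secretName": tls_secret}],
            "defaultBackend": {"service": {"name": service_name, "port": {"number": 80}}},
        },
    }


if __name__ == "__main__":
    items = [nodeport_service("myapp-svc", "myapp", 8080),
             https_ingress("myapp-ingress", "myapp-svc", "myapp-tls")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Piping the printed JSON to `kubectl apply -f -` should create both objects. The load balancer's public IP then appears on the Ingress, and that IP is what the DNS name should point to.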

You need to enable traffic between multiple groups of Compute Engine instances that are currently running two different GCP projects. Each group of Compute Engine instances is running in its own VPC. What should you do?

A. Verify that both projects are in a GCP Organization. Create a new VPC and add all instances.

B. Verify that both projects are in a GCP Organization. Share the VPC from one project and request that the Compute Engine instances in the other project use this shared VPC.

C. Verify that you are the Project Administrator of both projects. Create two new VPCs and add all instances.

D. Verify that you are the Project Administrator of both projects. Create a new VPC and add all instances.

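Answer: B

Both B and D may look workable, but since the instances already run in VPCs, sharing an existing VPC (B) saves cost.

Note that a VPC network belongs to a single project, so instances in the other project can attach to it only through Shared VPC, which requires the projects to be in an Organization.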
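Option B is typically set up with two gcloud commands, run by someone holding the Shared VPC Admin role in the Organization. The sketch below only builds their argv lists, and the project IDs are hypothetical.

```python
from typing import List


def shared_vpc_commands(host_project: str, service_project: str) -> List[List[str]]:
    """argv lists to share host_project's VPC with service_project."""
    return [
        # 1. Turn the project that owns the VPC into a Shared VPC host project.
        ["gcloud", "compute", "shared-vpc", "enable", host_project],
        # 2. Attach the other project so its instances can use the host project's subnets.
        ["gcloud", "compute", "shared-vpc", "associated-projects", "add",
         service_project, f"--host-project={host_project}"],
    ]
```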

You want to add a new auditor to a Google Cloud Platform project. The auditor should be allowed to read, but not modify, all project items. How should you configure the auditor's permissions?

A. Create a custom role with view-only project permissions. Add the user's account to the custom role.

B. Create a custom role with view-only service permissions. Add the user's account to the custom role.

C. Select the built-in IAM project Viewer role. Add the user's account to this role.

D. Select the built-in IAM service Viewer role. Add the user's account to this role.

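Answer: C

Assigning the built-in IAM project Viewer role (roles/viewer) is the right fit; there is no need to build a custom role.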
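For completeness, this is the single binding behind option C, built as an argv list. The project ID and auditor email are hypothetical placeholders.

```python
from typing import List


def grant_viewer_cmd(project_id: str, auditor_email: str) -> List[str]:
    """argv that binds the basic Viewer role (read-only) to a user at the project level."""
    return [
        "gcloud", "projects", "add-iam-policy-binding", project_id,
        f"--member=user:{auditor_email}", "--role=roles/viewer",
    ]
```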